Getting Clients Involved in Testing

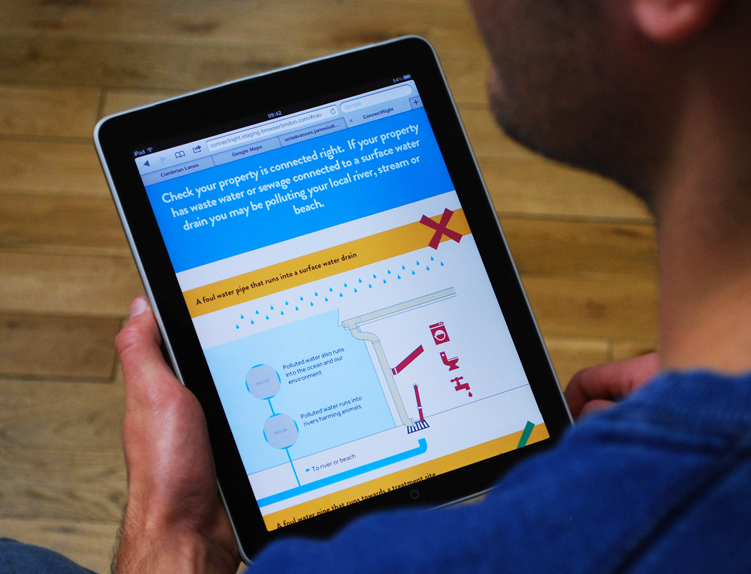

In 2009, the Environment Agency and the National Misconnection Group's ConnectRight campaign used a downloadable PDF to highlight the problem of water pollution in the UK.

Skip forward four years, and they'd realised that a PDF just doesn't work any more: there's only a brief window in which to capture a user's attention, and few people have the patience to wait for a PDF to download. They decided that a campaign website was the best way to present the information, and got in touch with us. We were happy to help, and learnt an important lesson along the way…

We like to build fast and test quickly. So, after two intense weeks of discovery-phase workshops, we were ready to test our proposed solutions.

Using what we had learnt during discovery, we invited end-users to take part in some A/B testing. We held these tests in our local area with people who fitted the campaign's target audience. We presented them with two options and asked: which is easier to understand?

After each test, we had an open and frank chat with our participants (a little more 'off the cuff' than usual) and asked them for feedback on their experience of using the website. We found that the relaxed setting drew out honest opinions about how end-users feel about the product; it felt less contrived than asking people to visit our studio for a formal session.

In most cases, we carry out these quick-fire tests without the client. That's the point: quick, face-to-face feedback from real people.

But what if your client wants to experience this open and honest feedback first-hand, so they can be sure the right decisions are made?

Well, you could invite them to tag along. While this sounds like a good idea at first, there is a danger that the relaxed environment, in which participants feel free to give open and frank feedback, could be compromised if five people are standing around watching them.

Instead, we encouraged our clients to try some testing for themselves. We gave them the tools and instructions they needed to go away and test anyone from the target demographic they could persuade to take part over the following two weeks.

A fortnight later, the feedback they gathered appeared to align with our original findings. More importantly, we found that the team at the Environment Agency had a much better understanding of the reasoning behind the decisions we had made. They were able to see, first-hand, how end-users interpret and engage with the product. It helped them get behind the design and trust the decisions being made for future development. This shared experience proved key to the client's buy-in to our proposed design.

This was the first time we had tried this collaborative approach, but based on the feedback in this instance, I'm sure it won't be the last. Throughout the year we'll continue to encourage clients to run tests on their own, letting them get their hands dirty and, in doing so, fully understand the thought process behind the decisions made throughout the project lifecycle.