Reimagining the Semantic Web: UCL’s Innovative Synthesis of AI and Web Science

This article is co-written by Jon Johnson of University College London (UCL) and Browser London.

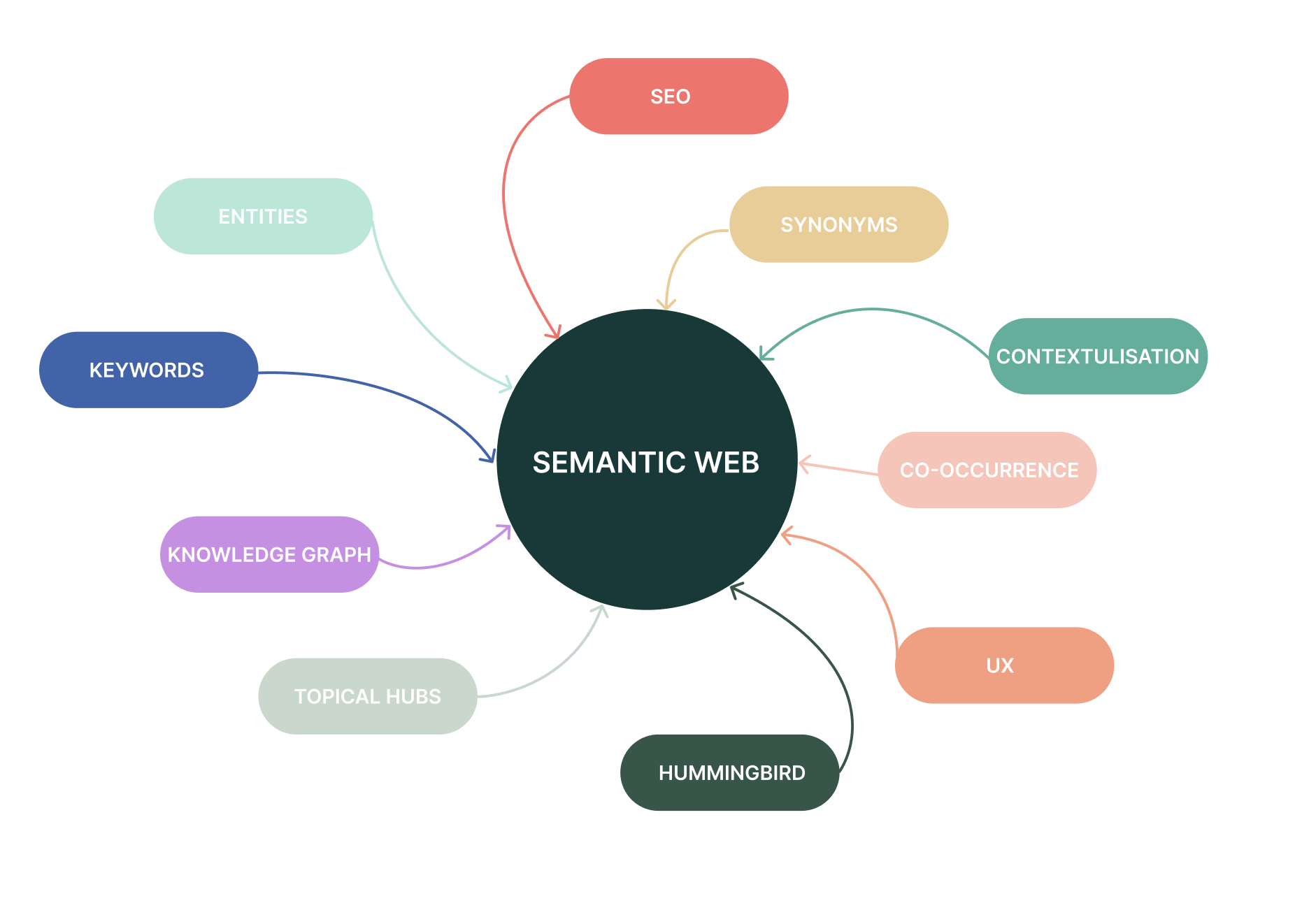

The Semantic Web: Weaving a Web of Meaning

Imagine the internet as a vast library. The current web is like a collection of books shelved in no particular order, searchable only by specific words or phrases. Now imagine a dream library where every piece of information is meticulously catalogued and interconnected based on its meaning and context. This dream library is the Semantic Web. This “web of meaning” is created through standardised ways of describing information (ontologies) and the relationships between data points. Proposed by Tim Berners-Lee in the early 2000s, the Semantic Web was envisioned as a web of data easily processed by machines, enabling computers to understand the meaning and context of information, not just its structure.
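To make the “web of meaning” a little more concrete, here is a minimal sketch in plain Python. It stores facts as subject-predicate-object triples, which is the basic shape of Semantic Web data, and answers a question by following relationships rather than matching words. Every identifier in it (`survey:Q1946`, `asks_about`, `concept:Employment` and so on) is made up for illustration and does not come from a real ontology or from UCL's systems.

```python
from typing import Optional

# A triple is (subject, predicate, object).
# All identifiers below are hypothetical examples.
Triple = tuple[str, str, str]

TRIPLES: list[Triple] = [
    ("survey:Q1946", "asks_about", "concept:Employment"),
    ("survey:Q2025", "asks_about", "concept:Employment"),
    ("survey:Q1946", "asked_in", "1946"),
    ("survey:Q2025", "asked_in", "2025"),
    ("concept:Employment", "broader", "concept:Work"),
]


def match(
    triples: list[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> list[Triple]:
    """Return every triple that fits the pattern; None acts as a wildcard."""
    return [
        t
        for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


def questions_about(triples: list[Triple], concept: str) -> list[str]:
    """Find questions linked to a concept by meaning, not by shared wording."""
    return sorted(t[0] for t in match(triples, p="asks_about", o=concept))


if __name__ == "__main__":
    print(questions_about(TRIPLES, "concept:Employment"))
```

The two questions are found together because both are explicitly linked to the same concept, even though they were asked decades apart and need not share a single word.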

However, the development of the Semantic Web hasn’t always aligned with its original academic and knowledge-sharing goals, often being co-opted for commercial purposes that prioritise quick results over deeper understanding. As Jon Johnson, a metadata specialist working at University College London, observes:

“The semantic part of the machine learning world isn’t that interested in semantics or interests in the relationship between ideas and the ideation between objects. They’re more interested in leveraging precision to achieve a different objective, which I think isn’t necessarily what the Semantic Web was about.”

Challenges in Data Management and Analysis

Jon’s work at UCL involves managing and analysing complex survey metadata, often dealing with datasets that span decades and contain thousands of variables. This presents unique challenges, particularly when trying to compare and understand data across different time periods and cultural contexts.

“The challenge is actually about the manipulation of semantics,” Jon explained. “It’s more about managing the semantics and actually trying to work out whether a question someone asked in 1946 is comparable to a question someone else asked in 2025.”

This level of semantic complexity is often overlooked in current AI and machine learning approaches, which tend to focus on pattern recognition and prediction rather than deep semantic understanding. Jon argues that addressing these challenges requires a return to the principles of the Semantic Web, with a focus on building domain-specific models and improving the quality of training data.

Reclaiming the Web for Academic Purposes

“I think that whole approach, and we’re far from the only people doing this stuff, it’s about trying to reclaim the web for ideas, I suppose,” Jon stated. This sentiment echoes a growing concern among academics that the commercialisation of AI and machine learning has led to a focus on quick results and profit rather than deeper understanding and knowledge creation.

Jon emphasised the need to build models around the specific language used within academic domains to achieve higher levels of accuracy and understanding. While current models may achieve 80% accuracy, Jon argues that, for academic purposes, this needs to be pushed into the 90% range to be truly useful.

The Role of Open Standards and Transparency

One key aspect of reclaiming the Semantic Web for academic purposes is the promotion of open standards and transparency in data management. Jon highlighted the importance of initiatives like SDMX (Statistical Data and Metadata eXchange) in improving the quality of international statistics through mandatory standards and quality reporting.

However, he also noted the lack of incentives for adopting such standards in many areas.

“Fundamentally, there’s legislation or policy around these things that basically says you have to do something. It’s always optional, and therefore it doesn’t happen,” Jon explained.

To encourage the adoption of open standards and better metadata practices, Jon suggests a combination of legal, community, and cultural norms. “It’s about building either legal or community or cultural norms that make those technological solutions happen,” he stated.

Balancing Human and Machine Involvement

As AI and machine learning become more prevalent in data analysis, Jon emphasises the importance of maintaining transparency and human oversight.

“Once you lose that transparency, then I think you lose trust in the output from the process,” he warned.

Finding the right balance between human and machine involvement is crucial: AI tools assist in tasks like metadata tagging and pattern recognition, while human researchers maintain control over the overall direction and interpretation of research. This approach ensures that the analysis remains grounded in theory and maintains its relevance to the original research questions.
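One way to picture this division of labour is a simple review queue. The sketch below is an illustration only, not a description of how UCL works: the `TagSuggestion` type, the `route` function, the example variables and the 0.9 threshold are all assumptions chosen for the example. A tool proposes tags with a confidence score, confident suggestions are pre-filled for a researcher to sign off, and everything else is sent for manual tagging, with the reason for each routing decision kept so the process stays transparent.

```python
from dataclasses import dataclass


@dataclass
class TagSuggestion:
    variable: str      # name of the survey variable (hypothetical)
    tag: str           # tag proposed by the tool
    confidence: float  # tool's own score between 0 and 1


def route(suggestions: list[TagSuggestion], threshold: float = 0.9):
    """Split suggestions into a sign-off queue and a manual-tagging queue.

    The threshold is an arbitrary assumption. Nothing is applied
    automatically: both queues end with a human decision.
    """
    sign_off, manual, audit_log = [], [], []
    for s in suggestions:
        if s.confidence >= threshold:
            sign_off.append(s)
            reason = "confident suggestion, pre-filled for sign-off"
        else:
            manual.append(s)
            reason = "low confidence, sent for manual tagging"
        # Record why each item went where it did.
        audit_log.append(f"{s.variable}: {s.tag} ({s.confidence:.2f}) -> {reason}")
    return sign_off, manual, audit_log


if __name__ == "__main__":
    suggestions = [
        TagSuggestion("v001", "employment", 0.95),
        TagSuggestion("v002", "income", 0.60),
    ]
    sign_off, manual, audit_log = route(suggestions)
    for line in audit_log:
        print(line)
```

The audit log is the important part of the design: it keeps a plain record of what the tool suggested and why each item was routed as it was, so a researcher can always trace an output back through the process.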

Browser have had the pleasure of working with UCL since 2014, and continue to support them with digital services. For more information about the work we do, take a look through our UCL blog archives.

Many thanks to Jon Johnson of UCL, and thanks also go to Rabie Madaci, Alireza Attari, and Jantine Doornbos for the photos ❤️.